New in Redis 4.x: the adorable MEMORY DOCTOR

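Redis 4.x was released last year and brought many new features. Skimming through them, a command called MEMORY DOCTOR caught my eye. MEMORY DOCTOR is a subcommand of the MEMORY command introduced in Redis 4.x. It diagnoses the instance and gives advice on Redis memory usage, and the analysis differs depending on the state of the instance. My first thought was: the ultimate AI? Does Redis run some sophisticated artificial-intelligence algorithm that analyzes its memory from every angle and then produces the best possible tuning advice? Does it keep getting stronger by learning, like AlphaGo? With those questions in mind I went looking for the source on GitHub, and once I learned the truth I nearly cried. I have to say the implementation of MEMORY DOCTOR is really cute, adorably so. Ultimate AI? I could have written this myself.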

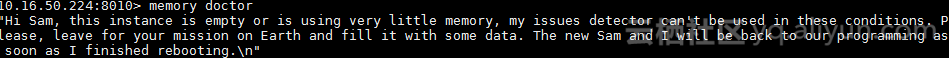

Usage demo

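Source code analysis

Source for the MEMORY DOCTOR feature: https://github.com/antirez/redis/blob/unstable/src/object.c

The whole implementation lives in the getMemoryDoctorReport function, which started at around line 1080 of that file at the time of writing.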

/* This implements MEMORY DOCTOR. An human readable analysis of the Redis
 * memory condition. */
sds getMemoryDoctorReport(void) {
    int empty = 0;          /* Instance is empty or almost empty. */
    int big_peak = 0;       /* Memory peak is much larger than used mem. */
    int high_frag = 0;      /* High fragmentation. */
    int high_alloc_frag = 0;/* High allocator fragmentation. */
    int high_proc_rss = 0;  /* High process rss overhead. */
    int high_alloc_rss = 0; /* High rss overhead. */
    int big_slave_buf = 0;  /* Slave buffers are too big. */
    int big_client_buf = 0; /* Client buffers are too big. */
    int many_scripts = 0;   /* Script cache has too many scripts. */
    int num_reports = 0;
    struct redisMemOverhead *mh = getMemoryOverheadData();

    if (mh->total_allocated < (1024*1024*5)) {
        empty = 1;
        num_reports++;
    } else {
        /* Peak is > 150% of current used memory? */
        if (((float)mh->peak_allocated / mh->total_allocated) > 1.5) {
            big_peak = 1;
            num_reports++;
        }

        /* Fragmentation is higher than 1.4 and 10MB ?*/
        if (mh->total_frag > 1.4 && mh->total_frag_bytes > 10<<20) {
            high_frag = 1;
            num_reports++;
        }

        /* External fragmentation is higher than 1.1 and 10MB? */
        if (mh->allocator_frag > 1.1 && mh->allocator_frag_bytes > 10<<20) {
            high_alloc_frag = 1;
            num_reports++;
        }

        /* Allocator fss is higher than 1.1 and 10MB ? */
        if (mh->allocator_rss > 1.1 && mh->allocator_rss_bytes > 10<<20) {
            high_alloc_rss = 1;
            num_reports++;
        }

        /* Non-Allocator fss is higher than 1.1 and 10MB ? */
        if (mh->rss_extra > 1.1 && mh->rss_extra_bytes > 10<<20) {
            high_proc_rss = 1;
            num_reports++;
        }

        /* Clients using more than 200k each average? */
        long numslaves = listLength(server.slaves);
        long numclients = listLength(server.clients)-numslaves;
        if (mh->clients_normal / numclients > (1024*200)) {
            big_client_buf = 1;
            num_reports++;
        }

        /* Slaves using more than 10 MB each? */
        if (numslaves > 0 && mh->clients_slaves / numslaves > (1024*1024*10)) {
            big_slave_buf = 1;
            num_reports++;
        }

        /* Too many scripts are cached? */
        if (dictSize(server.lua_scripts) > 1000) {
            many_scripts = 1;
            num_reports++;
        }
    }

    sds s;
    if (num_reports == 0) {
        s = sdsnew(
        "Hi Sam, I can't find any memory issue in your instance. "
        "I can only account for what occurs on this base.\n");
    } else if (empty == 1) {
        s = sdsnew(
        "Hi Sam, this instance is empty or is using very little memory, "
        "my issues detector can't be used in these conditions. "
        "Please, leave for your mission on Earth and fill it with some data. "
        "The new Sam and I will be back to our programming as soon as I "
        "finished rebooting.\n");
    } else {
        s = sdsnew("Sam, I detected a few issues in this Redis instance memory implants:\n\n");
        if (big_peak) {
            s = sdscat(s," * Peak memory: In the past this instance used more than 150% the memory that is currently using. The allocator is normally not able to release memory after a peak, so you can expect to see a big fragmentation ratio, however this is actually harmless and is only due to the memory peak, and if the Redis instance Resident Set Size (RSS) is currently bigger than expected, the memory will be used as soon as you fill the Redis instance with more data. If the memory peak was only occasional and you want to try to reclaim memory, please try the MEMORY PURGE command, otherwise the only other option is to shutdown and restart the instance.\n\n");
        }
        if (high_frag) {
            s = sdscatprintf(s," * High total RSS: This instance has a memory fragmentation and RSS overhead greater than 1.4 (this means that the Resident Set Size of the Redis process is much larger than the sum of the logical allocations Redis performed). This problem is usually due either to a large peak memory (check if there is a peak memory entry above in the report) or may result from a workload that causes the allocator to fragment memory a lot. If the problem is a large peak memory, then there is no issue. Otherwise, make sure you are using the Jemalloc allocator and not the default libc malloc. Note: The currently used allocator is \"%s\".\n\n", ZMALLOC_LIB);
        }
        if (high_alloc_frag) {
            s = sdscatprintf(s," * High allocator fragmentation: This instance has an allocator external fragmentation greater than 1.1. This problem is usually due either to a large peak memory (check if there is a peak memory entry above in the report) or may result from a workload that causes the allocator to fragment memory a lot. You can try enabling 'activedefrag' config option.\n\n");
        }
        if (high_alloc_rss) {
            s = sdscatprintf(s," * High allocator RSS overhead: This instance has an RSS memory overhead is greater than 1.1 (this means that the Resident Set Size of the allocator is much larger than the sum what the allocator actually holds). This problem is usually due to a large peak memory (check if there is a peak memory entry above in the report), you can try the MEMORY PURGE command to reclaim it.\n\n");
        }
        if (high_proc_rss) {
            s = sdscatprintf(s," * High process RSS overhead: This instance has non-allocator RSS memory overhead is greater than 1.1 (this means that the Resident Set Size of the Redis process is much larger than the RSS the allocator holds). This problem may be due to Lua scripts or Modules.\n\n");
        }
        if (big_slave_buf) {
            s = sdscat(s," * Big replica buffers: The replica output buffers in this instance are greater than 10MB for each replica (on average). This likely means that there is some replica instance that is struggling receiving data, either because it is too slow or because of networking issues. As a result, data piles on the master output buffers. Please try to identify what replica is not receiving data correctly and why. You can use the INFO output in order to check the replicas delays and the CLIENT LIST command to check the output buffers of each replica.\n\n");
        }
        if (big_client_buf) {
            s = sdscat(s," * Big client buffers: The clients output buffers in this instance are greater than 200K per client (on average). This may result from different causes, like Pub/Sub clients subscribed to channels bot not receiving data fast enough, so that data piles on the Redis instance output buffer, or clients sending commands with large replies or very large sequences of commands in the same pipeline. Please use the CLIENT LIST command in order to investigate the issue if it causes problems in your instance, or to understand better why certain clients are using a big amount of memory.\n\n");
        }
        if (many_scripts) {
            s = sdscat(s," * Many scripts: There seem to be many cached scripts in this instance (more than 1000). This may be because scripts are generated and `EVAL`ed, instead of being parameterized (with KEYS and ARGV), `SCRIPT LOAD`ed and `EVALSHA`ed. Unless `SCRIPT FLUSH` is called periodically, the scripts' caches may end up consuming most of your memory.\n\n");
        }
        s = sdscat(s,"I'm here to keep you safe, Sam. I want to help you.\n");
    }
    freeMemoryOverheadData(mh);
    return s;
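}

Reading the source, it is easy to see that Redis analyzes a node's memory usage along nine dimensions: Instance is empty or almost empty, Memory peak is much larger than used memory, High fragmentation, High allocator fragmentation, High process rss overhead, High rss overhead, Slave buffers are too big, Client buffers are too big, and Script cache has too many scripts. Each dimension has its own trigger condition and its own piece of advice, as summarized in the table below: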

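| Dimension | Trigger condition | Advice |
|---|---|---|
| Instance is empty or almost empty | The node has less than 5 MB allocated | None; the remaining checks are skipped |
| Memory peak is much larger than used memory | Past peak memory is more than 150% of current usage | Expect a high fragmentation ratio, which is harmless; run MEMORY PURGE to try to reclaim the memory, or restart the node |
| High fragmentation | Total fragmentation (process RSS relative to Redis's logical allocations) is above 1.4 and exceeds 10 MB | Usually caused by a large memory peak or by a workload that makes the allocator fragment memory heavily; make sure the allocator in use is Jemalloc |
| High allocator fragmentation | Allocator external fragmentation is above 1.1 and exceeds 10 MB | Try enabling the "activedefrag" config option for automatic defragmentation |
| High rss overhead (allocator) | Allocator RSS overhead is above 1.1 and exceeds 10 MB | Usually due to a large memory peak; try the MEMORY PURGE command to reclaim it |
| High process rss overhead | Non-allocator RSS overhead is above 1.1 and exceeds 10 MB | The process's resident set is much larger than the RSS held by the allocator; this may be due to Lua scripts or modules |
| Slave buffers are too big | Replica output buffers average more than 10 MB per replica | Some replica is struggling to receive data, either because it is too slow or because of network issues; check replica delays in the INFO output and each replica's output buffer with CLIENT LIST |
| Client buffers are too big | Client output buffers average more than 200 KB per client | Several causes are possible (slow Pub/Sub subscribers, large replies, long pipelines); investigate with CLIENT LIST |
| Script cache has too many scripts | More than 1000 scripts are cached | Parameterize scripts with KEYS and ARGV and use SCRIPT LOAD and EVALSHA, or call SCRIPT FLUSH periodically |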
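To make the trigger conditions easier to compare at a glance, here is a minimal sketch that restates them as a pure function returning a bitmask. The struct `mem_sample`, the `DIAG_*` flags, the `MB` macro and `diagnose` are names made up for this illustration, not part of Redis. The field names only mirror the ones read by getMemoryDoctorReport. Unlike the quoted source, the sketch also guards the per-client division against a client count of zero, which is my own addition.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical snapshot of the metrics the report reads. The field names
 * mirror redisMemOverhead, but this struct is an illustration only. */
struct mem_sample {
    size_t total_allocated, peak_allocated;
    double total_frag;      size_t total_frag_bytes;
    double allocator_frag;  size_t allocator_frag_bytes;
    double allocator_rss;   size_t allocator_rss_bytes;
    double rss_extra;       size_t rss_extra_bytes;
    size_t clients_normal, clients_slaves;  /* buffer bytes */
    long numclients, numslaves;
    size_t cached_scripts;
};

/* Hypothetical flag names, one per dimension of the report. */
enum {
    DIAG_EMPTY           = 1 << 0,
    DIAG_BIG_PEAK        = 1 << 1,
    DIAG_HIGH_FRAG       = 1 << 2,
    DIAG_HIGH_ALLOC_FRAG = 1 << 3,
    DIAG_HIGH_ALLOC_RSS  = 1 << 4,
    DIAG_HIGH_PROC_RSS   = 1 << 5,
    DIAG_BIG_CLIENT_BUF  = 1 << 6,
    DIAG_BIG_SLAVE_BUF   = 1 << 7,
    DIAG_MANY_SCRIPTS    = 1 << 8
};

#define MB(n) ((size_t)(n) << 20)   /* 10<<20 in the source is 10 MB */

unsigned diagnose(const struct mem_sample *m) {
    assert(m != NULL);
    unsigned flags = 0;

    /* Below 5 MB nothing else is checked. */
    if (m->total_allocated < MB(5)) return DIAG_EMPTY;

    if ((double)m->peak_allocated / (double)m->total_allocated > 1.5)
        flags |= DIAG_BIG_PEAK;
    if (m->total_frag > 1.4 && m->total_frag_bytes > MB(10))
        flags |= DIAG_HIGH_FRAG;
    if (m->allocator_frag > 1.1 && m->allocator_frag_bytes > MB(10))
        flags |= DIAG_HIGH_ALLOC_FRAG;
    if (m->allocator_rss > 1.1 && m->allocator_rss_bytes > MB(10))
        flags |= DIAG_HIGH_ALLOC_RSS;
    if (m->rss_extra > 1.1 && m->rss_extra_bytes > MB(10))
        flags |= DIAG_HIGH_PROC_RSS;
    /* Guard added in this sketch: skip the average when there are no clients. */
    if (m->numclients > 0 &&
        m->clients_normal / (size_t)m->numclients > 200 * 1024)
        flags |= DIAG_BIG_CLIENT_BUF;
    if (m->numslaves > 0 &&
        m->clients_slaves / (size_t)m->numslaves > MB(10))
        flags |= DIAG_BIG_SLAVE_BUF;
    if (m->cached_scripts > 1000)
        flags |= DIAG_MANY_SCRIPTS;
    return flags;
}
```

Note that every ratio check is paired with an absolute 10 MB threshold, so a small instance with a bad ratio but little wasted memory is not flagged.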

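At this point we should have a thorough understanding of how MEMORY DOCTOR works and under which conditions it gives which diagnosis. The implementation may be a little cute, but it is also perfectly reasonable. Later on, our RedisManager will build on the MEMORY DOCTOR command to add health diagnostics for clusters running 4.0 or above.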
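As a rough idea of how a management tool could consume the reply, the sketch below classifies the report text by looking for the fixed phrases that appear in the source quoted above. The names `doctor_verdict`, `classify_report` and `count_findings` are hypothetical and say nothing about how RedisManager actually does it. The matching is only as stable as those message strings, which may differ between Redis versions.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical verdicts for a MEMORY DOCTOR reply. */
enum doctor_verdict { DOCTOR_HEALTHY, DOCTOR_EMPTY, DOCTOR_ISSUES };

/* Classify the report by the fixed phrases used in getMemoryDoctorReport.
 * Anything that matches neither phrase is treated as "issues found". */
enum doctor_verdict classify_report(const char *report) {
    assert(report != NULL);
    if (strstr(report, "I can't find any memory issue") != NULL)
        return DOCTOR_HEALTHY;
    if (strstr(report, "this instance is empty") != NULL)
        return DOCTOR_EMPTY;
    return DOCTOR_ISSUES;
}

/* Count findings: in the quoted source each one starts a line with " * ". */
int count_findings(const char *report) {
    assert(report != NULL);
    int n = 0;
    const char *p = report;
    while ((p = strstr(p, " * ")) != NULL) {
        if (p == report || p[-1] == '\n') n++;
        p += 3;
    }
    return n;
}
```

A caller would fetch the reply with whatever Redis client it already uses and pass the string in. A verdict of DOCTOR_ISSUES with zero findings is a hint that the reply was not a doctor report at all.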

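Some thoughts

1. The indicators MEMORY DOCTOR uses to assess memory health line up closely with the output of the INFO command. For versions such as 3.0 or even 2.8 that do not support MEMORY DOCTOR, could we implement our own memory diagnosis and offer remediation advice for each node?

2. Who exactly is the Sam in the diagnostic messages?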
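On the first thought, a home-grown check for older versions could start from the INFO text alone. The sketch below is one possible shape, not a tested tool: `info_field` and `diagnose_info` are hypothetical helpers. It assumes the INFO memory section exposes `used_memory`, `used_memory_rss` and `used_memory_peak` as `name:value` lines, and that total fragmentation can be approximated as RSS divided by used memory. Only the empty, peak and fragmentation checks are reproduced, with the same thresholds as the source above.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Read a numeric "name:value" line from INFO text; fallback if absent. */
double info_field(const char *info, const char *name, double fallback) {
    assert(info != NULL && name != NULL);
    size_t len = strlen(name);
    assert(len > 0);
    const char *p = info;
    while ((p = strstr(p, name)) != NULL) {
        int at_line_start = (p == info || p[-1] == '\n');
        /* Require ':' right after the name so that "used_memory" does not
         * match "used_memory_rss". */
        if (at_line_start && p[len] == ':')
            return strtod(p + len + 1, NULL);
        p += len;
    }
    return fallback;
}

/* Hypothetical flags for the subset of checks that INFO can support. */
enum { OLD_EMPTY = 1, OLD_BIG_PEAK = 2, OLD_HIGH_FRAG = 4 };

unsigned diagnose_info(const char *info) {
    const double mb = 1024.0 * 1024.0;
    double used = info_field(info, "used_memory", 0);
    double rss  = info_field(info, "used_memory_rss", 0);
    double peak = info_field(info, "used_memory_peak", 0);
    unsigned flags = 0;

    if (used < 5 * mb) return OLD_EMPTY;       /* same 5 MB cutoff */
    if (peak / used > 1.5) flags |= OLD_BIG_PEAK;
    /* Assumption: fragmentation ~ rss / used, wasted bytes ~ rss - used. */
    if (rss / used > 1.4 && rss - used > 10 * mb) flags |= OLD_HIGH_FRAG;
    return flags;
}
```

The buffer and script-cache checks are left out because they would need other data sources, such as CLIENT LIST, which this sketch does not parse.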