hive安装,

Hive只在一个节点上安装即可

1.上传tar包

2.解压

tar -zxvf hive-0.9.0.tar.gz -C /cloud/

3.安装mysql数据库(切换到root用户)(装在哪里没有限制,只有能联通hadoop集群的节点)

mysql安装仅供参考,不同版本mysql有各自的安装流程

rpm -qa | grep mysql

rpm -e mysql-libs-5.1.66-2.el6_3.i686 --nodeps

rpm -ivh MySQL-server-5.1.73-1.glibc23.i386.rpm

rpm -ivh MySQL-client-5.1.73-1.glibc23.i386.rpm

修改mysql的密码

/usr/bin/mysql_secure_installation

(注意:删除匿名用户,允许用户远程连接)

登陆mysql

mysql -u root -p

4.配置hive

(a)配置HIVE_HOME环境变量 vi conf/hive-env.sh 配置其中的$hadoop_home

(b)配置元数据库信息 vi hive-site.xml

添加如下内容:

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

<description>password to use against metastore database</description>

</property>

</configuration>

5.安装hive和mysq完成后,将mysql的连接jar包拷贝到$HIVE_HOME/lib目录下

如果出现没有权限的问题,在mysql授权(在安装mysql的机器上执行)

mysql -uroot -p

#(执行下面的语句 *.*:所有库下的所有表 %:任何IP地址或主机都可以连接)

GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY 'root' WITH GRANT OPTION;

FLUSH PRIVILEGES;

6. Jline包版本不一致的问题,需要拷贝hive的lib目录中jline.2.12.jar的jar包替换掉hadoop中的

/home/hadoop/app/hadoop-2.6.4/share/hadoop/yarn/lib/jline-0.9.94.jar

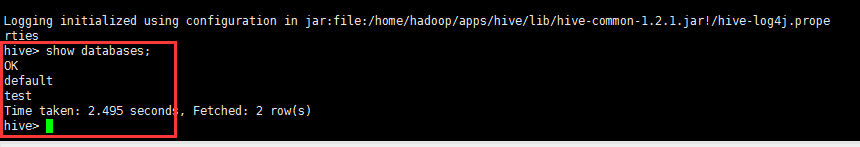

启动hive

bin/hive

----------------------------------------------------------------------------------------------------

6.建表(默认是内部表)

create table trade_detail(id bigint, account string, income double, expenses double, time string) row format delimited fields terminated by '\t';

建分区表

create table td_part(id bigint, account string, income double, expenses double, time string) partitioned by (logdate string) row format delimited fields terminated by '\t';

建外部表

create external table td_ext(id bigint, account string, income double, expenses double, time string) row format delimited fields terminated by '\t' location '/td_ext';

7.创建分区表

普通表和分区表区别:有大量数据增加的需要建分区表

create table book (id bigint, name string) partitioned by (pubdate string) row format delimited fields terminated by '\t';

分区表加载数据

load data local inpath './book.txt' overwrite into table book partition (pubdate='2010-08-22');

load data local inpath '/root/data.am' into table beauty partition (nation="USA");

select nation, avg(size) from beauties group by nation order by avg(size);

本文转自yushiwh 51CTO博客,原文链接:http://blog.51cto.com/yushiwh/1924808,如需转载请自行联系原作者